How does useState() work internally in React?

I bet you are familiar with useState() in React.

The basic counter app shows how useState() enables us to add state to our component. In this episode, we’ll figure out how useState() works internally by looking into its source code.

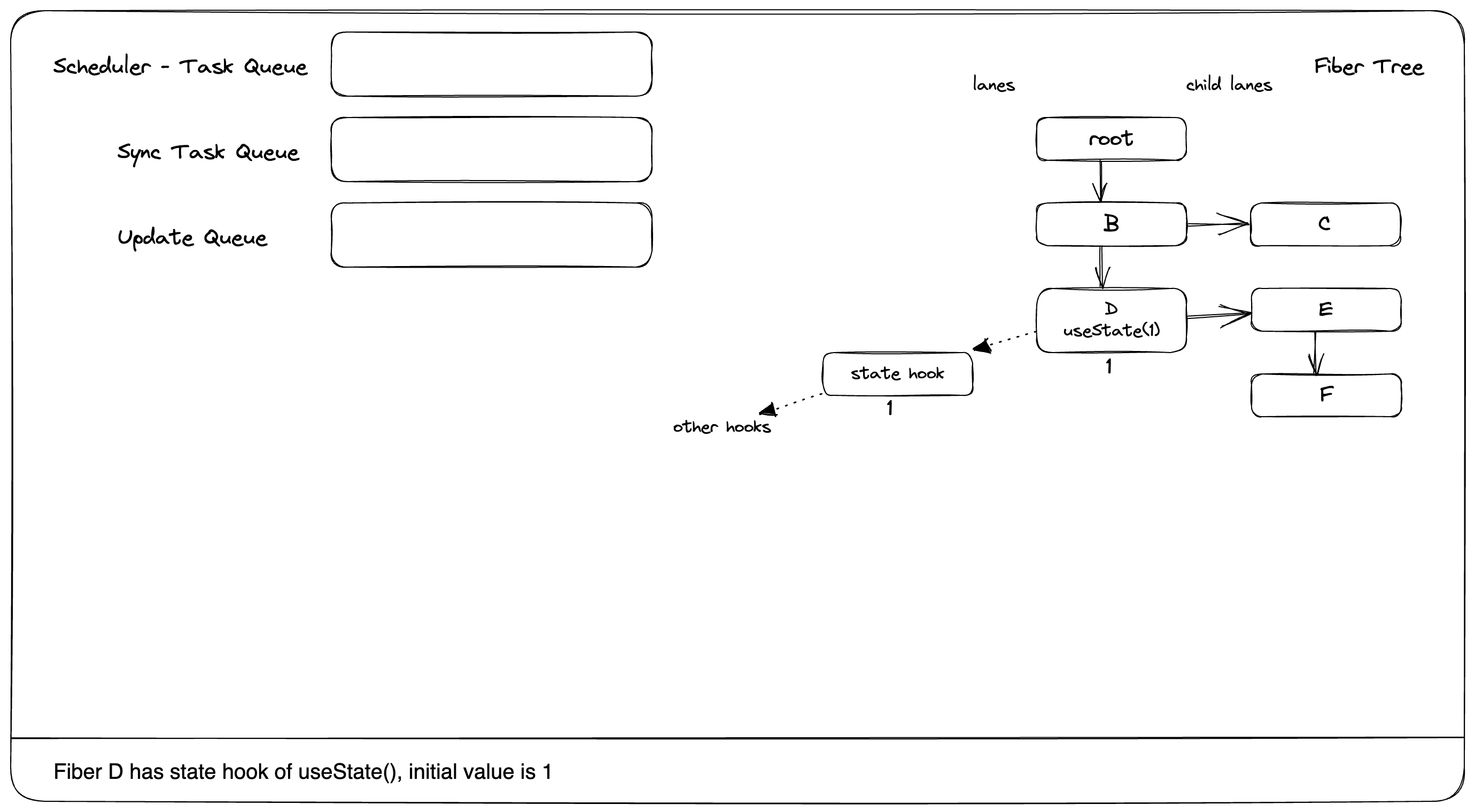

1. useState() in initial render(mount)

The initial render is pretty straightforward.

```js
function mountState<S>(
  initialState: (() => S) | S,
): [S, Dispatch<BasicStateAction<S>>] {
  // A new hook is created.
  const hook = mountWorkInProgressHook();
  if (typeof initialState === 'function') {
    initialState = initialState();
  }
  // memoizedState on the hook holds the actual state value.
  hook.memoizedState = hook.baseState = initialState;
  // The update queue holds the future state updates.
  // Keep in mind that when setting state, the state value is not updated right away.
  // Updates might have different priorities and don't all need to be processed
  // right away (for more, refer to "What are Lanes in React"),
  // so we need to stash the updates and process them later.
  const queue: UpdateQueue<S, BasicStateAction<S>> = {
    pending: null,
    // Lanes are the priority. For more, refer to "What are Lanes in React".
    lanes: NoLanes,
    dispatch: null,
    lastRenderedReducer: basicStateReducer,
    lastRenderedState: (initialState: any),
  };
  // Keep in mind that this queue is the update queue for the hook.
  hook.queue = queue;
  // This dispatchSetState() is what we actually get as the state setter.
  // Notice that it is bound to the current fiber.
  const dispatch: Dispatch<BasicStateAction<S>> = (queue.dispatch =
    (dispatchSetState.bind(null, currentlyRenderingFiber, queue): any));
  // Here is the familiar pair we get from useState().
  return [hook.memoizedState, dispatch];
}
```
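To make the shape of the hook easier to see, here is a minimal sketch in TypeScript. It is a toy model, not React's code: `mountToyState`, `ToyHook` and the array-based queue are names and simplifications I made up for illustration.

```typescript
// Toy model of what mounting useState() sets up. Illustrative only.
type Action<S> = S | ((prev: S) => S);

interface ToyUpdate<S> {
  action: Action<S>;
}

interface ToyHook<S> {
  memoizedState: S; // the actual state value
  queue: { pending: ToyUpdate<S>[] }; // future updates are stashed here
}

function mountToyState<S>(
  initial: S | (() => S),
): [S, (action: Action<S>) => void, ToyHook<S>] {
  // A lazy initializer is called once, on mount only.
  const value: S =
    typeof initial === "function" ? (initial as () => S)() : (initial as S);
  const hook: ToyHook<S> = { memoizedState: value, queue: { pending: [] } };
  // The setter only stashes an update on this hook's queue.
  const dispatch = (action: Action<S>): void => {
    hook.queue.pending.push({ action });
  };
  return [hook.memoizedState, dispatch, hook];
}

const [count, setCount, hook] = mountToyState<number>(() => 0);
setCount(1);
setCount((n) => n + 1);
// count is still 0, and two updates are waiting in hook.queue.pending
```

The point of the sketch is that calling the setter changes nothing by itself; it only records what should happen later.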

2. What happens in setState()?

From the code above, we know that setState() is internally a bound dispatchSetState().

```js
function dispatchSetState<S, A>(
  fiber: Fiber,
  queue: UpdateQueue<S, A>,
  action: A,
) {
  // This defines the priority of the update.
  // For more, refer to "What are Lanes in React".
  const lane = requestUpdateLane(fiber);
  // Here is the update object that is going to be stashed.
  const update: Update<S, A> = {
    lane,
    action,
    hasEagerState: false,
    eagerState: null,
    next: (null: any),
  };
  if (isRenderPhaseUpdate(fiber)) {
    // We can call setState() during render, which is a useful pattern,
    // but it could lead to infinite rendering, so be careful.
    enqueueRenderPhaseUpdate(queue, update);
  } else {
    const alternate = fiber.alternate;
    // This condition check is for the early bailout, which means doing nothing
    // when setting the same state. Bailout means to stop going deeper and skip
    // the re-render of a subtree, and it happens inside a re-render. Here the
    // goal is to avoid even scheduling the re-render, hence "early" bailout.
    // But the condition is actually a hack, a stricter rule than needed, which
    // means that React avoids scheduling a re-render on a best-effort basis,
    // with no guarantee. We'll cover this in detail in the caveat section.
    if (
      fiber.lanes === NoLanes &&
      (alternate === null || alternate.lanes === NoLanes)
    ) {
      // The queue is currently empty, which means we can eagerly compute the
      // next state before entering the render phase. If the new state is the
      // same as the current state, we may be able to bail out entirely.
      const lastRenderedReducer = queue.lastRenderedReducer;
      if (lastRenderedReducer !== null) {
        let prevDispatcher;
        try {
          const currentState: S = (queue.lastRenderedState: any);
          const eagerState = lastRenderedReducer(currentState, action);
          // Stash the eagerly computed state, and the reducer used to compute
          // it, on the update object. If the reducer hasn't changed by the
          // time we enter the render phase, then the eager state can be used
          // without calling the reducer again.
          update.hasEagerState = true;
          update.eagerState = eagerState;
          if (is(eagerState, currentState)) {
            // Fast path. We can bail out without scheduling React to re-render.
            // It's still possible that we'll need to rebase this update later,
            // if the component re-renders for a different reason and by that
            // time the reducer has changed.
            // TODO: Do we still need to entangle transitions in this case?
            enqueueConcurrentHookUpdateAndEagerlyBailout(fiber, queue, update);
            // This return prevents the update from being scheduled.
            return;
          }
        } catch (error) {
          // Suppress the error. It will throw again in the render phase.
        } finally {
          if (__DEV__) {
            ReactCurrentDispatcher.current = prevDispatcher;
          }
        }
      }
    }
    // This stashes the update. Updates are processed and attached to fibers
    // at the beginning of an actual re-render.
    const root = enqueueConcurrentHookUpdate(fiber, queue, update, lane);
    if (root !== null) {
      const eventTime = requestEventTime();
      // This schedules the re-render. Notice that the re-render doesn't happen
      // right away; the actual scheduling depends on the React scheduler.
      scheduleUpdateOnFiber(root, fiber, lane, eventTime);
      entangleTransitionUpdate(root, queue, lane);
    }
  }
}
```
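The role of hasEagerState and eagerState can be sketched in isolation. The following is a simplified TypeScript model under my own assumptions: `createUpdate`, `applyUpdate` and the `idle` flag (standing in for the "no lanes on either fiber" check) are hypothetical names, not React APIs.

```typescript
type Action<S> = S | ((prev: S) => S);

interface ToyUpdate<S> {
  action: Action<S>;
  hasEagerState: boolean;
  eagerState: S | null;
}

function basicStateReducer<S>(state: S, action: Action<S>): S {
  return typeof action === "function"
    ? (action as (prev: S) => S)(state)
    : (action as S);
}

// Dispatch side: when nothing else is pending (`idle`), compute the next state
// right away. Returns null when the eager state equals the current state,
// which models the early bailout.
function createUpdate<S>(
  lastRenderedState: S,
  action: Action<S>,
  idle: boolean,
): ToyUpdate<S> | null {
  const update: ToyUpdate<S> = { action, hasEagerState: false, eagerState: null };
  if (idle) {
    const eagerState = basicStateReducer(lastRenderedState, action);
    update.hasEagerState = true;
    update.eagerState = eagerState;
    if (Object.is(eagerState, lastRenderedState)) return null;
  }
  return update;
}

// Render side: reuse the eager state instead of running the action again.
function applyUpdate<S>(state: S, update: ToyUpdate<S>): S {
  return update.hasEagerState
    ? (update.eagerState as S)
    : basicStateReducer(state, update.action);
}

let updaterCalls = 0;
const increment = (n: number): number => {
  updaterCalls++;
  return n + 1;
};

const eager = createUpdate<number>(0, increment, true); // computed at dispatch time
const skipped = createUpdate<number>(5, 5, true); // same value, so null
const deferred = createUpdate<number>(0, increment, false); // computed later, in render
```

In this model the updater function of `eager` runs exactly once, at dispatch time, while the one in `deferred` runs only when `applyUpdate()` is called.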

Let’s take a further look at how the update objects are handled.

```js
// This function is called inside prepareFreshStack(), which is one of the
// initial steps of a re-render. In other words, until a re-render actually
// begins, all state updates stay stashed.
export function finishQueueingConcurrentUpdates(): void {
  const endIndex = concurrentQueuesIndex;
  concurrentQueuesIndex = 0;
  concurrentlyUpdatedLanes = NoLanes;
  let i = 0;
  while (i < endIndex) {
    const fiber: Fiber = concurrentQueues[i];
    concurrentQueues[i++] = null;
    const queue: ConcurrentQueue = concurrentQueues[i];
    concurrentQueues[i++] = null;
    const update: ConcurrentUpdate = concurrentQueues[i];
    concurrentQueues[i++] = null;
    const lane: Lane = concurrentQueues[i];
    concurrentQueues[i++] = null;
    if (queue !== null && update !== null) {
      const pending = queue.pending;
      if (pending === null) {
        // This is the first update. Create a circular list.
        update.next = update;
      } else {
        update.next = pending.next;
        pending.next = update;
      }
      // Remember the hook.queue we mentioned before?
      // Here the stashed updates are finally attached to the fiber,
      // ready to be processed.
      queue.pending = update;
    }
    if (lane !== NoLane) {
      // Also pay attention to this call, which marks the fiber node path dirty.
      // For more, refer to the diagram in "How does React bailout work in reconciliation".
      markUpdateLaneFromFiberToRoot(fiber, update, lane);
    }
  }
}

function enqueueUpdate(
  fiber: Fiber,
  queue: ConcurrentQueue | null,
  update: ConcurrentUpdate | null,
  lane: Lane,
) {
  // Don't update the `childLanes` on the return path yet. If we already in
  // the middle of rendering, wait until after it has completed.
  // Internally the updates are kept in a list, like a message queue,
  // and they are processed in batches.
  concurrentQueues[concurrentQueuesIndex++] = fiber;
  concurrentQueues[concurrentQueuesIndex++] = queue;
  concurrentQueues[concurrentQueuesIndex++] = update;
  concurrentQueues[concurrentQueuesIndex++] = lane;
  concurrentlyUpdatedLanes = mergeLanes(concurrentlyUpdatedLanes, lane);
  // The fiber's `lane` field is used in some places to check if any work is
  // scheduled, to perform an eager bailout, so we need to update it immediately.
  // TODO: We should probably move this to the "shared" queue instead.
  // We see that both the current and alternate fibers are marked dirty.
  // This is important for understanding the caveat.
  fiber.lanes = mergeLanes(fiber.lanes, lane);
  const alternate = fiber.alternate;
  if (alternate !== null) {
    alternate.lanes = mergeLanes(alternate.lanes, lane);
  }
}

export function enqueueConcurrentHookUpdate<S, A>(
  fiber: Fiber,
  queue: HookQueue<S, A>,
  update: HookUpdate<S, A>,
  lane: Lane,
): FiberRoot | null {
  const concurrentQueue: ConcurrentQueue = (queue: any);
  const concurrentUpdate: ConcurrentUpdate = (update: any);
  enqueueUpdate(fiber, concurrentQueue, concurrentUpdate, lane);
  return getRootForUpdatedFiber(fiber);
}
```
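The circular list built around queue.pending is easy to get wrong in your head, so here is a small standalone sketch of the same pointer manipulation. The `Update` and `Queue` types and the helper names are simplified stand-ins of my own; only the append logic mirrors the code above.

```typescript
interface Update {
  action: string;
  next: Update | null;
}

interface Queue {
  pending: Update | null;
}

// queue.pending always points at the LAST update,
// and last.next points back at the FIRST one.
function appendUpdate(queue: Queue, update: Update): void {
  const pending = queue.pending;
  if (pending === null) {
    update.next = update; // first update: a one-element circle
  } else {
    update.next = pending.next; // new last -> first
    pending.next = update; // old last -> new last
  }
  queue.pending = update;
}

// Walk the circle once, starting from the first update.
function listActions(queue: Queue): string[] {
  const result: string[] = [];
  const last = queue.pending;
  if (last === null) return result;
  const first = last.next as Update;
  let update = first;
  do {
    result.push(update.action);
    update = update.next as Update;
  } while (update !== first);
  return result;
}

const hookQueue: Queue = { pending: null };
for (const action of ["a", "b", "c"]) {
  appendUpdate(hookQueue, { action, next: null });
}
// listActions(hookQueue) returns ["a", "b", "c"], and hookQueue.pending is "c"
```

Keeping a pointer to the last element of a circular list gives cheap access to both ends: appending touches `pending`, and reading starts at `pending.next`.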

```js
function markUpdateLaneFromFiberToRoot(
  sourceFiber: Fiber,
  update: ConcurrentUpdate | null,
  lane: Lane,
): void {
  // Notice that lanes are updated for both the current fiber and the alternate fiber.
  // Remember that dispatchSetState() is bound to the source fiber, so when we
  // set state, we might not always be updating the current fiber tree.
  // Setting both of them makes sure nothing is missed, but it has a side effect
  // that we'll cover again in the caveat section.
  sourceFiber.lanes = mergeLanes(sourceFiber.lanes, lane);
  let alternate = sourceFiber.alternate;
  if (alternate !== null) {
    alternate.lanes = mergeLanes(alternate.lanes, lane);
  }
  // Walk the parent path to the root and update the child lanes.
  // For more, refer to "How does React bailout work in reconciliation".
  let isHidden = false;
  let parent = sourceFiber.return;
  let node = sourceFiber;
  while (parent !== null) {
    parent.childLanes = mergeLanes(parent.childLanes, lane);
    alternate = parent.alternate;
    if (alternate !== null) {
      alternate.childLanes = mergeLanes(alternate.childLanes, lane);
    }
    if (parent.tag === OffscreenComponent) {
      const offscreenInstance: OffscreenInstance = parent.stateNode;
      if (offscreenInstance.isHidden) {
        isHidden = true;
      }
    }
    node = parent;
    parent = parent.return;
  }
  if (isHidden && update !== null && node.tag === HostRoot) {
    const root: FiberRoot = node.stateNode;
    markHiddenUpdate(root, update, lane);
  }
}
```
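Marking the path dirty is just bitmask merging while walking up the return pointers. A rough sketch, assuming a stripped-down fiber with only `lanes`, `childLanes` and `return` (the lane bit values below are hypothetical, and alternates and Offscreen handling are left out):

```typescript
type Lanes = number;
const NoLanes: Lanes = 0b000;
const SyncLane: Lanes = 0b001; // hypothetical bit assignments for this sketch
const TransitionLane: Lanes = 0b100;

const mergeLanes = (a: Lanes, b: Lanes): Lanes => a | b;
const includesSomeLane = (set: Lanes, subset: Lanes): boolean =>
  (set & subset) !== NoLanes;

interface ToyFiber {
  label: string;
  lanes: Lanes; // work on this fiber itself
  childLanes: Lanes; // work somewhere below this fiber
  return: ToyFiber | null;
}

// Mark the source fiber, then bubble the lane into childLanes up to the root.
function markLaneFromFiberToRoot(source: ToyFiber, lane: Lanes): ToyFiber {
  source.lanes = mergeLanes(source.lanes, lane);
  let node = source;
  let parentFiber = source.return;
  while (parentFiber !== null) {
    parentFiber.childLanes = mergeLanes(parentFiber.childLanes, lane);
    node = parentFiber;
    parentFiber = parentFiber.return;
  }
  return node; // the root of the tree
}

const rootFiber: ToyFiber = { label: "root", lanes: NoLanes, childLanes: NoLanes, return: null };
const app: ToyFiber = { label: "App", lanes: NoLanes, childLanes: NoLanes, return: rootFiber };
const counter: ToyFiber = { label: "Counter", lanes: NoLanes, childLanes: NoLanes, return: app };
const sibling: ToyFiber = { label: "Sibling", lanes: NoLanes, childLanes: NoLanes, return: app };

const reached = markLaneFromFiberToRoot(counter, SyncLane);
// root and App now have SyncLane in childLanes; Sibling is untouched
```

With this marking in place, a render that starts at the root can follow `childLanes` down to the fiber that has work and skip clean branches such as `sibling`.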

```js
// Excerpt from scheduleUpdateOnFiber(); the surrounding code is omitted.

// This is the only line we need to care about in scheduleUpdateOnFiber().
// It makes sure a re-render is scheduled if there are pending updates.
// The updates are not processed yet, since the actual re-render hasn't started.
// When the re-render actually starts depends on a few factors, such as the
// category of the event and the status of the scheduler.
// We've met this function many times; refer to
// "How does useTransition() work internally" to know more.
ensureRootIsScheduled(root, eventTime);
if (
  lane === SyncLane &&
  executionContext === NoContext &&
  (fiber.mode & ConcurrentMode) === NoMode &&
  // Treat `act` as if it's inside `batchedUpdates`, even in legacy mode.
  !(__DEV__ && ReactCurrentActQueue.isBatchingLegacy)
) {
  // Flush the synchronous work now, unless we're already working or inside
  // a batch. This is intentionally inside scheduleUpdateOnFiber instead of
  // scheduleCallbackForFiber to preserve the ability to schedule a callback
  // without immediately flushing it. We only do this for user-initiated
  // updates, to preserve historical behavior of legacy mode.
  resetRenderTimer();
  flushSyncCallbacksOnlyInLegacyMode();
}
```

3. useState() in re-render

After the updates are stashed, it is time to actually run them and update the state value.

This happens in useState() during the re-render.

```js
// On re-render, useState() delegates to updateReducer() with basicStateReducer.
function updateReducer<S, I, A>(
  reducer: (S, A) => S,
  initialArg: I,
  init?: I => S,
): [S, Dispatch<A>] {
  // This gives us the previously created hook, so we can get the value from it.
  const hook = updateWorkInProgressHook();
  // Remember, this is the update queue holding all the updates.
  // Since useState() is called after the re-render begins, the stashed updates
  // have already been moved to the fiber.
  const queue = hook.queue;
  if (queue === null) {
    throw new Error(
      'Should have a queue. This is likely a bug in React. Please file an issue.',
    );
  }
  queue.lastRenderedReducer = reducer;
  const current: Hook = (currentHook: any);
  // The last rebase update that is NOT part of the base state.
  // baseQueue needs some explanation.
  // In the best case, we can just ditch the updates once they are processed.
  // But because there may be multiple updates of different priorities, we might
  // need to skip some of them and process them later. That's why they are
  // stored in baseQueue.
  // Also, to make sure the final state is right, once an update is put in the
  // baseQueue, all following updates must be put there as well, even the ones
  // that are processed.
  // E.g. the state value is 1 and we have 3 updates: +1 (low), *10 (high), -2 (low).
  // Because *10 is high priority, we process it first: 1 * 10 = 10.
  // Later we process the low priority ones. If we didn't keep *10 in the queue,
  // the result would be 1 + 1 - 2 = 0, but what we need is (1 + 1) * 10 - 2.
  let baseQueue = current.baseQueue;
  // The last pending update that hasn't been processed yet.
  const pendingQueue = queue.pending;
  if (pendingQueue !== null) {
    // We have new updates that haven't been processed yet.
    // We'll add them to the base queue.
    if (baseQueue !== null) {
      // Merge the pending queue and the base queue.
      const baseFirst = baseQueue.next;
      const pendingFirst = pendingQueue.next;
      baseQueue.next = pendingFirst;
      pendingQueue.next = baseFirst;
    }
    // The pending queue is cleared and merged into baseQueue.
    current.baseQueue = baseQueue = pendingQueue;
    queue.pending = null;
  }
  if (baseQueue !== null) {
    // We have a queue to process.
    const first = baseQueue.next;
    let newState = current.baseState;
    // After processing the baseQueue, a new baseQueue is generated.
    let newBaseState = null;
    let newBaseQueueFirst = null;
    let newBaseQueueLast = null;
    let update = first;
    // This do...while loop tries to process all updates.
    do {
      // ...
      // Check if this update was made while the tree was hidden. If so, then
      // it's not a "base" update and we should disregard the extra base lanes
      // that were added to renderLanes when we entered the Offscreen tree.
      const shouldSkipUpdate = isHiddenUpdate
        ? !isSubsetOfLanes(getWorkInProgressRootRenderLanes(), updateLane)
        : !isSubsetOfLanes(renderLanes, updateLane);
      if (shouldSkipUpdate) {
        // Priority is insufficient. Skip this update. If this is the first
        // skipped update, the previous update/state is the new base
        // update/state.
        // (This is the low priority case.)
        const clone: Update<S, A> = {
          lane: updateLane,
          action: update.action,
          hasEagerState: update.hasEagerState,
          eagerState: update.eagerState,
          next: (null: any),
        };
        // The update is put in the new baseQueue because it is not processed.
        if (newBaseQueueLast === null) {
          newBaseQueueFirst = newBaseQueueLast = clone;
          newBaseState = newState;
        } else {
          newBaseQueueLast = newBaseQueueLast.next = clone;
        }
        // Update the remaining priority in the queue.
        // TODO: Don't need to accumulate this. Instead, we can remove
        // renderLanes from the original lanes.
        currentlyRenderingFiber.lanes = mergeLanes(
          currentlyRenderingFiber.lanes,
          updateLane,
        );
        markSkippedUpdateLanes(updateLane);
      } else {
        // This update does have sufficient priority.
        // As explained above for baseQueue: once the new baseQueue is not
        // empty, all following updates must be stashed too, for future use.
        if (newBaseQueueLast !== null) {
          const clone: Update<S, A> = {
            // This update is going to be committed so we never want uncommit
            // it. Using NoLane works because 0 is a subset of all bitmasks, so
            // this will never be skipped by the check above.
            lane: NoLane,
            action: update.action,
            hasEagerState: update.hasEagerState,
            eagerState: update.eagerState,
            next: (null: any),
          };
          newBaseQueueLast = newBaseQueueLast.next = clone;
        }
        // Process this update.
        if (update.hasEagerState) {
          // If this update is a state update (not a reducer) and was processed eagerly,
          // we can use the eagerly computed state
          newState = ((update.eagerState: any): S);
        } else {
          const action = update.action;
          newState = reducer(newState, action);
        }
      }
      update = update.next;
    } while (update !== null && update !== first);
    if (newBaseQueueLast === null) {
      newBaseState = newState;
    } else {
      newBaseQueueLast.next = (newBaseQueueFirst: any);
    }
    // Mark that the fiber performed work, but only if the new state is
    // different from the current state.
    // If the state doesn't change during the re-render, this is not called and
    // the component can bail out (NOT the early bailout).
    if (!is(newState, hook.memoizedState)) {
      markWorkInProgressReceivedUpdate();
    }
    // Finally the new state is set.
    hook.memoizedState = newState;
    // The new baseQueue is set for the next round of re-render.
    hook.baseState = newBaseState;
    hook.baseQueue = newBaseQueueLast;
    queue.lastRenderedState = newState;
  }
  if (baseQueue === null) {
    // `queue.lanes` is used for entangling transitions. We can set it back to
    // zero once the queue is empty.
    queue.lanes = NoLanes;
  }
  const dispatch: Dispatch<A> = (queue.dispatch: any);
  // Now we have the new state, and dispatch() is stable!
  return [hook.memoizedState, dispatch];
}
```
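The +1 (low), *10 (high), -2 (low) example can be worked through with a simplified model of this loop. This is a sketch under my own assumptions: plain arrays replace the circular lists, `HighLane` and `LowLane` are made-up lanes, and `processUpdates` is a hypothetical helper, not a React function.

```typescript
type Lanes = number;
const NoLane: Lanes = 0b00;
const HighLane: Lanes = 0b01; // hypothetical lanes for this sketch
const LowLane: Lanes = 0b10;

interface Update {
  lane: Lanes;
  action: (n: number) => number;
}

interface HookState {
  memoizedState: number;
  baseState: number;
  baseQueue: Update[];
}

const isSubsetOfLanes = (set: Lanes, subset: Lanes): boolean =>
  (set & subset) === subset;

function processUpdates(hook: HookState, pending: Update[], renderLanes: Lanes): HookState {
  const updates = [...hook.baseQueue, ...pending];
  let newState = hook.baseState;
  let newBaseState: number | null = null;
  const newBaseQueue: Update[] = [];
  for (const update of updates) {
    if (!isSubsetOfLanes(renderLanes, update.lane)) {
      // Insufficient priority: skip it, remembering the state before the first skip.
      if (newBaseQueue.length === 0) newBaseState = newState;
      newBaseQueue.push(update);
    } else {
      // Once something was skipped, keep later updates too, so that they can
      // be replayed in order. NoLane guarantees they are never skipped again.
      if (newBaseQueue.length > 0) newBaseQueue.push({ ...update, lane: NoLane });
      newState = update.action(newState);
    }
  }
  return {
    memoizedState: newState,
    baseState: newBaseState === null ? newState : newBaseState,
    baseQueue: newBaseQueue,
  };
}

const pendingUpdates: Update[] = [
  { lane: LowLane, action: (n) => n + 1 },
  { lane: HighLane, action: (n) => n * 10 },
  { lane: LowLane, action: (n) => n - 2 },
];
const mounted: HookState = { memoizedState: 1, baseState: 1, baseQueue: [] };

const afterHighRender = processUpdates(mounted, pendingUpdates, HighLane);
// memoizedState: 10, baseState: 1, baseQueue holds all three updates
const afterLowRender = processUpdates(afterHighRender, [], LowLane);
// memoizedState: (1 + 1) * 10 - 2 = 18, baseQueue is empty
```

The high priority render shows 10 on screen right away, while baseState stays at 1 so that the later render can replay every update from the beginning in the original order.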

4. Summary

Here are some simple slides explaining the internals.

5. Understanding the caveats

React.dev lists the caveats; let’s try to understand why they exist.

5.1 state update is not sync

The set function only updates the state variable for the next render. If you read the state variable after calling the set function, you will still get the old value that was on the screen before your call.

This is easy to understand: we’ve already seen that setState() only schedules a re-render instead of running it synchronously, and the updated value is only available in the next render, because the state is computed in useState(), not in setState().
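A tiny model makes the "old value" behavior concrete. It is only a sketch: `renderCounter` stands in for React calling the component, and the module-level variables stand in for the hook; none of these names come from React.

```typescript
type Action = number | ((prev: number) => number);

let memoizedState = 0; // lives on the hook and survives renders
const pendingActions: Action[] = []; // stashed updates

function setCount(action: Action): void {
  pendingActions.push(action); // only stashes; nothing is computed here
}

// One "render": process the stashed updates, then hand out a snapshot.
function renderCounter(): number {
  for (const action of pendingActions.splice(0)) {
    memoizedState = typeof action === "function" ? action(memoizedState) : action;
  }
  return memoizedState;
}

const countInFirstRender = renderCounter();
setCount(countInFirstRender + 1);
const readRightAfterSet = countInFirstRender; // still 0: the snapshot never changes
const countInSecondRender = renderCounter(); // 1: computed during the next render
```

The variable captured in the first render is a plain value, so nothing setCount() does can change it afterwards.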

5.2 setState() with same value might still trigger re-render.

If the new value you provide is identical to the current state, as determined by an Object.is comparison, React will skip re-rendering the component and its children. This is an optimization. Although in some cases React may still need to call your component before skipping the children, it shouldn’t affect your code.

This is the trickiest caveat.

I must say, it took me quite some time to figure out why a re-render happens even when setting the same state value.

To understand this, we must go back to the eager bailout condition inside dispatchSetState(), which we didn’t dive into earlier.

```js
if (
  fiber.lanes === NoLanes &&
  (alternate === null || alternate.lanes === NoLanes)
  // Under these conditions, try to avoid scheduling a re-render if the state doesn't change.
) {
  // ...
}
```

As explained in the previous slides, the ideal check would be whether the pending update queue and the baseQueue of the hook are both empty. But with the current implementation, we can’t know that until the re-render actually starts.

So React falls back to a simpler check: whether the fiber nodes have any pending update at all. Since a fiber is marked dirty as soon as an update is enqueued, this check doesn’t need to wait for the re-render to start.

But there is a side effect.

When enqueueing an update, both the current and alternate fibers are marked dirty with lanes. This is needed because dispatchSetState() is bound to the source fiber, so we can’t be sure the update will be processed unless we mark both current and alternate.

But the lanes are only cleared in beginWork(), which is the actual re-rendering.

As a result, once an update is scheduled, the dirty lanes are only fully cleared after at least 2 rounds of re-rendering.

The steps are roughly as follows.

- fiber1 (current, clean) / null (alternate) → fiber1 is the source fiber for useState()
- setState(true) → because true is different from false, early bailout doesn’t happen
- fiber1 (current, dirty) / null (alternate) → enqueue updates
- fiber1 (current, dirty) / fiber2 (workInProgress, dirty) → re-render begins, a new fiber is created as workInProgress
- fiber1 (current, dirty) / fiber2 (workInProgress, clean) → lanes cleared in beginWork()
- fiber1 (alternate, dirty) / fiber2 (current, clean) → after committing, React swaps the 2 versions of the fiber tree
- setState(true) → because one of the fibers is not clean, early bailout still doesn’t happen
- fiber1 (alternate, dirty) / fiber2 (current, dirty) → enqueue updates
- fiber1 (workInProgress, dirty) / fiber2 (current, dirty) → re-render begins, fiber1 is assigned the lanes from fiber2
- fiber1 (workInProgress, clean) / fiber2 (current, dirty) → lanes cleared in beginWork()
- fiber1 (workInProgress, clean) / fiber2 (current, clean) → no state change found, lanes are removed from the current fiber in bailoutHooks(), and bailout (NOT early bailout) happens
- fiber1 (current, clean) / fiber2 (alternate, clean) → after committing, React swaps the 2 versions of the fiber tree
- setState(true) → this time both fibers are clean and we can actually do early bailout!
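The steps above can be replayed with a small simulation. This is a deliberately crude model that I wrote to mirror the list, not React's algorithm: a fiber is reduced to its `lanes` and `alternate`, the render is synchronous, and `setState`, `render`, `Outcome` and `componentCalls` are all hypothetical names.

```typescript
const NoLanes = 0;
const SyncLane = 1;

type Outcome = "early bailout" | "bailout during render" | "re-rendered";

interface ToyFiber {
  id: string;
  lanes: number;
  alternate: ToyFiber | null;
}

const fiber1: ToyFiber = { id: "fiber1", lanes: NoLanes, alternate: null };
let current: ToyFiber = fiber1;
let renderedState = false;
let componentCalls = 0;

// The setter stays bound to the fiber that mounted the hook: fiber1.
function setState(value: boolean): Outcome {
  const source = fiber1;
  const alternate = source.alternate;
  const bothClean =
    source.lanes === NoLanes && (alternate === null || alternate.lanes === NoLanes);
  if (bothClean && Object.is(value, renderedState)) {
    return "early bailout"; // nothing is scheduled at all
  }
  // Enqueue: both versions of the fiber are marked dirty.
  source.lanes |= SyncLane;
  if (alternate !== null) alternate.lanes |= SyncLane;
  return render(value);
}

function render(value: boolean): Outcome {
  // Reuse the alternate as workInProgress, or create it on the first update.
  let wip = current.alternate;
  if (wip === null) {
    wip = { id: "fiber2", lanes: NoLanes, alternate: current };
    current.alternate = wip;
  }
  wip.lanes = current.lanes; // workInProgress starts with the lanes of current
  wip.lanes = NoLanes; // and they are cleared once work on this fiber begins
  componentCalls++; // the component function runs, so useState() runs
  const changed = !Object.is(value, renderedState);
  renderedState = value;
  if (!changed) current.lanes = NoLanes; // models bailoutHooks() cleaning current
  current = wip; // commit: the two versions swap roles
  return changed ? "re-rendered" : "bailout during render";
}

const outcomes: Outcome[] = [setState(true), setState(true), setState(true)];
// ["re-rendered", "bailout during render", "early bailout"], componentCalls is 2
```

In this model the second setState(true) still calls the component once, and only the third call is skipped without any rendering work.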

I think there could be a fix for this, but it may cost too much given the fiber architecture and how hooks work. There has already been some discussion, from which we can see that the React team has no intention of fixing it, since most of the time it does no harm.

We should keep in mind that React re-renders whenever it considers it necessary, so we should not assume that performance tricks always work.

5.3 React batches state updates

React batches state updates. It updates the screen after all the event handlers have run and have called their set functions. This prevents multiple re-renders during a single event. In the rare case that you need to force React to update the screen earlier, for example to access the DOM, you can use flushSync.

As illustrated in the previous slides, updates are stashed before they are actually processed, and then they are processed together.
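Batching falls out of the "stash first, process later" design. Here is a minimal sketch, assuming a single flag in place of React's scheduler; `flushIfScheduled` is a made-up stand-in for React flushing work after the event handler returns.

```typescript
type Action = number | ((prev: number) => number);

let countState = 0;
let renderCount = 0;
let renderScheduled = false;
const stashed: Action[] = [];

function setCount(action: Action): void {
  stashed.push(action);
  renderScheduled = true; // scheduling is idempotent: one flag, many updates
}

function flushIfScheduled(): void {
  if (!renderScheduled) return;
  renderScheduled = false;
  renderCount++;
  // All stashed updates are processed together, in order.
  for (const action of stashed.splice(0)) {
    countState = typeof action === "function" ? action(countState) : action;
  }
}

function handleClick(): void {
  setCount((c) => c + 1);
  setCount((c) => c + 1);
  setCount((c) => c + 1);
}

handleClick();
flushIfScheduled();
// countState is 3 after a single render
```

Three calls to the setter produce one render, and because each updater function receives the result of the previous one, the final value is 3.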

Want to know more about how React works internally?

Check out my series - React Internals Deep Dive!