A real example of concurrent mode in Shaku - switch to `useDeferredValue()` instead of throttling or debouncing.

1. Problem Statement

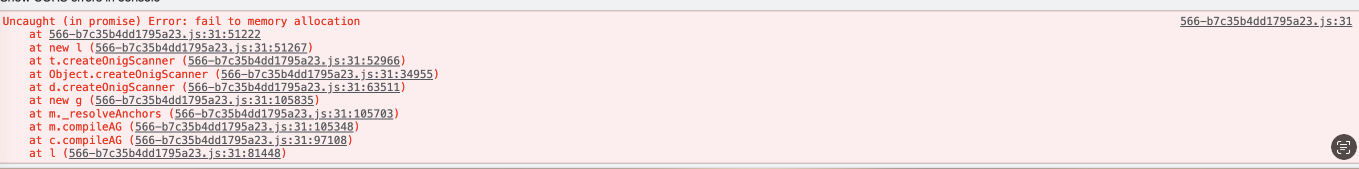

I’ve been building Shaku since this May and launched Shaku Playground and Shaku Snippet. In both apps, there is a code editor on the left and a preview on the right, like below.

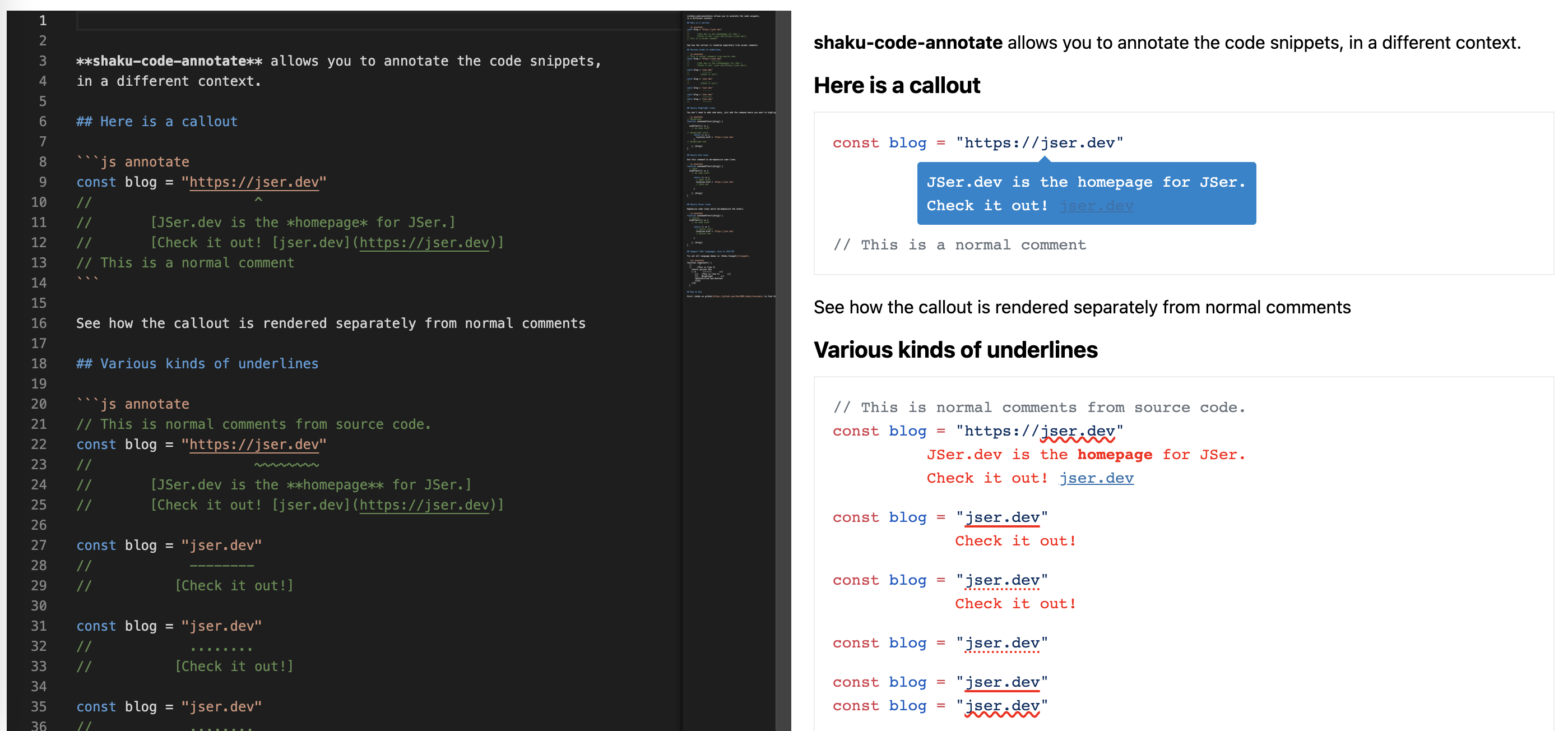

From time to time, a critical error happens: "Error: fail to memory allocation".

From the error stack, we can see it happens in the rendering of syntax highlighting. Because the parsing is heavy, it is natural to assume that frequent typing leads to the memory issue. The original code looked like below.

Heavy process

    setPreview(data.toString());}));}, [code]);

On each key press, code changes and the heavy process is triggered!


2. Debouncing helps but creates bad UX.

A primitive improvement idea is to debounce the process, which is pretty straightforward.

All we need is a useDebouncedCallback().
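The hook's code is not shown here. As a rough, framework-free sketch of what debouncing does to a burst of calls (the `debounce` helper, the `updatePreview` name, and the 300 ms delay are illustrative assumptions, not Shaku's actual code):

```typescript
// Hypothetical helper for illustration; not the hook used in Shaku.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  delayMs: number
) {
  let timer: ReturnType<typeof setTimeout> | undefined;

  const run = (...args: A): void => {
    // Every call restarts the timer, so only the last call of a burst survives.
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, delayMs);
  };

  const cancel = (): void => {
    if (timer !== undefined) clearTimeout(timer);
    timer = undefined;
  };

  return Object.assign(run, { cancel });
}

// Three quick "key presses" schedule a single run of the heavy work.
const calls: string[] = [];
const updatePreview = debounce((code: string) => {
  calls.push(code);
}, 300);

updatePreview("a");
updatePreview("ab");
updatePreview("abc");
// Nothing has run yet; after 300 ms of silence only "abc" is processed.
```

The fixed delay is the trade-off: the heavy work runs less often, but never sooner than `delayMs` after the last key press.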


And we are able to change the code to below.


Now the error is gone!

But now we have a new problem: the preview becomes laggy because of the fixed delay in debouncing. This is quite noticeable when we edit code or select a different language. Below is the screen recording showing the lag. This is clearly not great UX.

Also, the process is async, meaning a race condition could occur.
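To make the race concrete, here is a small simulation, under the assumption that two requests are in flight and the older one finishes last. `processAsync` and `preview` are hypothetical stand-ins for the real processor and state:

```typescript
// Simulated async processor: each call parks its completion callback so the
// requests can be finished in any order. All names here are hypothetical.
const pendingCompletions: Array<() => void> = [];
let preview = "";

function processAsync(code: string, onDone: (html: string) => void): void {
  pendingCompletions.push(() => onDone(`<pre>${code}</pre>`));
}

// The user types "a", then "ab"; both requests are now in flight.
processAsync("a", (html) => {
  preview = html;
});
processAsync("ab", (html) => {
  preview = html;
});

// Finish them in reverse order: the newer request first, the older one last.
[...pendingCompletions].reverse().forEach((finish) => finish());

console.log(preview); // <pre>a</pre> (stale: the latest input was "ab")
```

Whichever request settles last wins, regardless of which input is the most recent.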

3. Choose useDeferredValue() over throttling or debouncing

I’ve explained how useDeferredValue() works internally: it lowers the rendering priority and makes the render interruptible in concurrent mode, which we can benefit from here.

The full code change is in this PR; I’ll explain the main parts.

The original process is a chain of nested promises, so we first need to put them into a store and initialize them lazily.

This is a simple implementation of a data fetcher. For more, refer to How lazy() works internally in React?.

    const processedResultStore = new Map<string, Fetcher<string>>();

    const getProcessedResult = (
      lang: string,
      code: string,
      processor: ReturnType<typeof remark>
    ) => {
      const key = `${lang}|${code}`;
      if (!processedResultStore.has(key)) {
        processedResultStore.set(
          key,
          new Fetcher(() =>
            processor
              .process(`\`\`\`${lang} annotate\n${code}\n\`\`\``)
              .then((data) => {
                return data.toString();
              })
          )
        );
        // Keep at most 5 entries. A Map iterates in insertion order,
        // so the first key is the oldest one.
        if (processedResultStore.size > 5) {
          // keys() yields the key itself; entries() yields [key, value]
          // tuples, which have no `.key` property.
          const firstResultKey = processedResultStore.keys().next().value;
          if (firstResultKey !== undefined) {
            processedResultStore.delete(firstResultKey);
          }
        }
      }
      // The entry for `key` always exists at this point.
      return processedResultStore.get(key)!.fetch();
    };

Two stores are needed because of the original nested promises.
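The `Fetcher` class itself is not shown here. A minimal sketch of what such a class could look like, assuming it follows the Suspense convention of throwing the pending promise from `fetch()` (the field names and the `loads` counter are illustrative, and the real implementation may differ):

```typescript
// Hypothetical Suspense-style fetcher, for illustration only.
class Fetcher<T> {
  private load: () => Promise<T>;
  private promise: Promise<void> | null = null;
  private result: { ok: true; data: T } | { ok: false; error: unknown } | null =
    null;

  constructor(load: () => Promise<T>) {
    this.load = load;
  }

  fetch(): T {
    if (this.result !== null) {
      if (this.result.ok) return this.result.data;
      throw this.result.error;
    }
    if (this.promise === null) {
      // Lazy: the loader runs on the first fetch(), not at construction time.
      this.promise = this.load().then(
        (data) => {
          this.result = { ok: true, data };
        },
        (error: unknown) => {
          this.result = { ok: false, error };
        }
      );
    }
    // Still pending: throw the promise so a Suspense boundary can wait on it.
    throw this.promise;
  }
}

let loads = 0;
const fetcher = new Fetcher(() => {
  loads += 1;
  return Promise.resolve("<pre>hi</pre>");
});

let thrown: unknown;
try {
  fetcher.fetch();
} catch (e) {
  thrown = e; // a Promise while the data is still loading
}
```

Calling `fetch()` again while the data is pending rethrows the same promise instead of starting a second load, which is what lets a render be retried cheaply.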


And then we can replace the useEffect() with something that reads like synchronous code.


The code is much cleaner now and also the UI is more responsive!

I didn’t investigate the actual internals in our case, but here are my thoughts.

- If key presses are sparse, the original and the final approach behave the same, since all the work is done between key presses.

- If key presses are dense,

  - In our original code, where processing starts on each key press, too much processing work is triggered in useEffect() callbacks, and memory bloats since all the useEffect() callbacks are going to be run.

  - In our final implementation with concurrent mode, multiple re-renders are scheduled on key presses, not useEffect() callbacks. And when a re-render is scheduled, the previous unfinished re-render is cancelled (for more, refer to my post about transition), which means only a limited amount of processing work runs at the same time.
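The difference between the two dense-typing cases can be modelled outside React. The toy sketch below is not how React's scheduler works internally; it only assumes that requests pile up while the worker is busy and that `flush()` is called once it is free. `RunEverything` and `KeepLatest` are hypothetical names:

```typescript
// Toy model only: this is NOT React's scheduler.
type Process = (code: string) => void;

// Models "every useEffect() callback is going to be run".
class RunEverything {
  private queue: string[] = [];

  request(code: string): void {
    this.queue.push(code);
  }

  flush(process: Process): void {
    const jobs = this.queue;
    this.queue = [];
    jobs.forEach((code) => process(code));
  }
}

// Models "a newly scheduled re-render cancels the unfinished one".
class KeepLatest {
  private latest: string | undefined;

  request(code: string): void {
    this.latest = code; // the superseded request is dropped
  }

  flush(process: Process): void {
    if (this.latest === undefined) return;
    const code = this.latest;
    this.latest = undefined;
    process(code);
  }
}

const keystrokes = ["c", "co", "con", "cons", "const"];
const ranEverything: string[] = [];
const ranLatest: string[] = [];

const eager = new RunEverything();
const coalescing = new KeepLatest();
for (const code of keystrokes) {
  eager.request(code);
  coalescing.request(code);
}
eager.flush((code) => ranEverything.push(code));
coalescing.flush((code) => ranLatest.push(code));

console.log(ranEverything.length); // 5
console.log(ranLatest.join(",")); // const
```

With five key presses, the first strategy runs the heavy work five times, while the second runs it once, for the latest input only.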

Think about it like this: suppose you are in a restaurant and constantly asking a waiter to update your order (which is not cool, don’t do it!). Once an order is being prepared in the kitchen, it is heavy work.

- Under useEffect(), since each request is just some random task, the waiter doesn’t know they all come from the same person: you. When an order is being prepared and you ask to change it multiple times, the kitchen is too busy (blocked), so the waiter just stacks up the orders and sends all of them to the kitchen once it is available, which goes over the kitchen’s capacity.

- But under concurrent mode, the waiter knows the job is to make sure every table’s order is up to date, not just to do some random task. When an order is being prepared and you ask to change it multiple times, the waiter knows to cancel the previous requests and keep only the last order for the same table, and thus the kitchen is saved from fire.

Also, we can see from 2 that the race condition doesn’t exist at all!

Want to know more about how React works internally?

Check out my series - React Internals Deep Dive!